WordPress is known for its built-in search-engine-friendly features. However, it’s still not perfect. With these six plugins, every blog can easily maximize its search engine rankings:

1) Platinum SEO Pack: The plugin author claims it beats the famous All-In-One SEO plugin as the ultimate WordPress SEO solution. I have to agree: this plugin has lived up to its name.

2) Sitemap Combo (XML Sitemaps & Sitemap Generator): A sitemap helps get your website indexed faster. Together, these two plugins complete your blog’s sitemap.

3) Sociable: Social media is often the key to link building. This plugin adds sweet social bookmarking links at the bottom of your post.

4) Digg This: We are not done with social media yet. Digg This is a WordPress plugin that detects incoming links from Digg.com to your WordPress post and automatically displays a link back to the Digg post so people can Digg your story. Cool.

5) Redirection: Broken links hurt. This plugin manages 301 redirects, keeps track of 404 errors, and generally tidies up any loose ends your site may have.

6) Pagination: This plugin replaces the ‘previous/next page’ links with page numbers. That is great usability for visitors, and it gives search engines bird’s-eye access to all the pages.

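To make the 301-redirect and 404-tracking idea behind the Redirection plugin a bit more concrete, here is a minimal Python sketch. It is not how the plugin itself is implemented; the paths, the `REDIRECTS` table, and the `resolve` function are all made up for illustration.

```python
from collections import Counter

# Hypothetical redirect table: old path -> new path.
REDIRECTS = {
    "/old-post": "/new-post",
    "/2008/seo-tips": "/seo-tips",
}

# Paths that currently exist on this imaginary site.
LIVE_PATHS = {"/", "/new-post", "/seo-tips"}

# Counts how often each missing path was requested.
not_found_log = Counter()


def resolve(path):
    """Return (status, location) for a requested path."""
    if path in REDIRECTS:
        # Permanent redirect: tells search engines the page has moved.
        return 301, REDIRECTS[path]
    if path in LIVE_PATHS:
        return 200, path
    # Record the miss so it can be turned into a redirect later.
    not_found_log[path] += 1
    return 404, None
```

Calling `resolve("/old-post")` gives `(301, "/new-post")`, while repeated requests for a missing path pile up in `not_found_log`, which is roughly the kind of report you would use to decide which new redirects to add.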

Bonus:

7) SEO Friendly Images: Optimize your images for search engines too! This plugin automatically updates all images with proper ALT and TITLE attributes.

8) Increased Sociability: This is probably the best social media plugin ever: it displays a custom message to greet visitors who come from different social bookmarking websites.

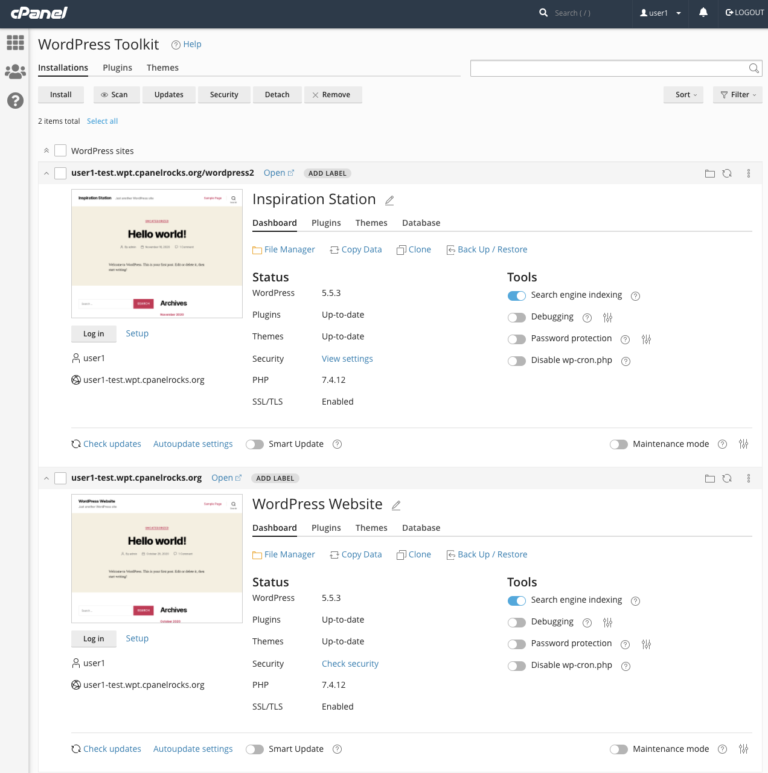

9) The Best Hosting For WordPress: Technically (or literally), this is not a plugin. But every blog that needs the best SEO rankings needs to be backed by a stable and reliable web host. And you know where to look for one. 😉

6 Best WordPress Plugins To Maximize Your SEO Ranking

1. Yoast SEO

Overview

Yoast SEO is one of the most popular and comprehensive SEO plugins available for WordPress. It offers a wide range of features that can help you optimize your site’s content and structure for better search engine rankings.

Key Features

- Content Analysis: Provides real-time content analysis for SEO and readability.

- XML Sitemaps: Automatically generates XML sitemaps to help search engines index your site.

- Meta Tags Management: Easily manage and optimize your site’s meta tags.

- Breadcrumbs: Adds breadcrumbs to your site for better navigation and SEO.

- Social Integration: Integrates with social media platforms to enhance your social SEO.

Usage

Yoast SEO is user-friendly, making it suitable for both beginners and advanced users. The plugin provides suggestions for improving your content, such as keyword usage, readability, and internal linking.
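If you are curious what an XML sitemap actually contains, the sketch below builds one by hand in Python. It follows the public sitemaps.org format, but it is only an illustration and not Yoast SEO's own code; `build_sitemap` and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap([
        ("https://example.com/", "2024-01-01"),
        ("https://example.com/about/", "2024-02-15"),
    ]))
```

A plugin saves you from maintaining this by hand: every time you publish or update a post, the list of URLs and modification dates has to change with it.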

2. All in One SEO Pack

Overview

All in One SEO Pack is another widely used SEO plugin for WordPress. It offers robust features to help you optimize your site for search engines without requiring advanced technical knowledge.

Key Features

- XML Sitemaps: Automatically generates and submits XML sitemaps to search engines.

- Meta Tags: Helps you automatically generate meta tags for your content.

- Social Media Integration: Supports social media integration for better sharing and SEO.

- Schema Markup: Adds schema markup to your site to improve search engine understanding of your content.

- E-commerce SEO: Optimizes WooCommerce sites for better search engine rankings.

Usage

All in One SEO Pack is easy to set up and configure, making it ideal for users who want a straightforward SEO solution. The plugin offers both a free version and a premium version with additional features.
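Schema markup can sound abstract, so here is a rough Python sketch of what a plugin might place in a page's HTML for a blog post. It uses the schema.org `Article` type in JSON-LD form; the `article_schema` helper and the sample values are assumptions for illustration, not All in One SEO Pack's actual output.

```python
import json


def article_schema(headline, author, date_published):
    """Return a JSON-LD <script> tag describing a blog post."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,
    }
    # Escape "</" so a value cannot close the script tag early.
    payload = json.dumps(data).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


if __name__ == "__main__":
    print(article_schema("6 Best WordPress SEO Plugins", "Jane Doe", "2024-01-01"))
```

Search engines read this block alongside the visible page, which is why a plugin that fills it in from your post title, author, and date saves a lot of manual work.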

3. Rank Math

Overview

Rank Math is a powerful WordPress SEO plugin that offers a comprehensive set of tools to help you improve your site’s SEO performance. It is known for its intuitive interface and advanced features.

Key Features

- Setup Wizard: A step-by-step setup wizard to configure SEO settings easily.

- Advanced SEO Analysis: Provides in-depth SEO analysis with actionable recommendations.

- Schema Markup: Supports multiple schema types to enhance search engine understanding.

- Keyword Optimization: Allows you to optimize content for multiple focus keywords.

- Google Search Console Integration: Integrates with Google Search Console for better insights.

Usage

Rank Math is designed for both beginners and advanced users. Its modular approach allows you to enable or disable features as needed, making it highly customizable.
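As a very simplified picture of checking content against several focus keywords, the Python sketch below counts how often each keyword appears in a piece of text. Rank Math's real analysis is more involved than this; `keyword_counts` is a hypothetical helper written only for this example.

```python
import re


def keyword_counts(text, keywords):
    """Count case-insensitive, whole-phrase occurrences of each keyword."""
    counts = {}
    for keyword in keywords:
        pattern = r"\b" + re.escape(keyword) + r"\b"
        counts[keyword] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts


if __name__ == "__main__":
    post = "WordPress SEO tips: SEO plugins for WordPress"
    print(keyword_counts(post, ["seo", "wordpress", "hosting"]))
```

A keyword with a count of zero is an obvious gap, while a very high count may be a sign the text is being stuffed rather than written for readers.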

4. SEOPress

Overview

SEOPress is a feature-rich SEO plugin that offers a range of tools to help you optimize your site for search engines. It is known for its simplicity and effectiveness.

Key Features

- Content Analysis: Provides content analysis with actionable recommendations.

- XML and HTML Sitemaps: Generates both XML and HTML sitemaps for better indexing.

- Meta Tags Management: Easily manage meta titles and descriptions.

- Social Media Integration: Integrates with social media platforms for enhanced sharing.

- Google Analytics: Integrates with Google Analytics for better tracking and insights.

Usage

SEOPress is user-friendly and suitable for users of all skill levels. It offers a free version with essential features and a premium version with advanced capabilities.
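Managing meta titles and descriptions mostly means keeping them short enough to display in full. The Python sketch below trims text at a word boundary. The limits of 60 and 160 characters are commonly cited rules of thumb and an assumption here, not values taken from SEOPress, and `fit_meta` is a hypothetical helper.

```python
# Rule-of-thumb limits (assumptions, not official values).
TITLE_LIMIT = 60
DESCRIPTION_LIMIT = 160


def fit_meta(text, limit):
    """Trim text to at most `limit` characters, cutting between words."""
    text = " ".join(text.split())  # collapse runs of whitespace
    if len(text) <= limit:
        return text
    # Leave room for "..." and drop the last (possibly partial) word.
    cut = text[: limit - 3].rsplit(" ", 1)[0]
    return cut.rstrip(",.;:") + "..."


if __name__ == "__main__":
    long_title = "6 Best WordPress Plugins To Maximize Your SEO Ranking And Traffic This Year"
    print(fit_meta(long_title, TITLE_LIMIT))
```

Writing the title and description yourself is still better than letting them be cut off, but a check like this makes it easy to spot which posts need attention.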

5. The SEO Framework

Overview

The SEO Framework is a lightweight and efficient SEO plugin that focuses on providing essential SEO features without any bloat. It is designed to be fast and easy to use.

Key Features

- Automated SEO: Automatically optimizes your site’s SEO settings.

- XML Sitemaps: Generates XML sitemaps to help search engines index your site.

- Local SEO: Includes features for local search optimisation.

- Social Integration: Supports social media integration for better sharing.

- Schema Markup: Adds schema markup to improve search engine understanding.

Usage

The SEO Framework is ideal for users who prefer a simple and straightforward SEO solution. It is easy to set up and use, making it suitable for beginners and advanced users alike.

6. Broken Link Checker

Overview

Broken Link Checker is a crucial plugin for maintaining your site’s SEO health by monitoring and fixing broken links. Broken links can negatively impact your SEO, making this plugin essential for site maintenance.

Key Features

- Link Monitoring: Automatically checks for broken links in your content.

- Notifications: Notifies you when broken links are found.

- Easy Fixes: Allows you to fix broken links directly from the plugin interface.

- Customizable Settings: Offers customizable settings to control link checking frequency and types.

Usage

Broken Link Checker is a must-have plugin for any WordPress site. It helps you maintain a healthy site by ensuring that all links are working correctly, thus improving user experience and SEO.
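The core idea of link monitoring fits in a few lines of Python using only the standard library. This is a minimal sketch under the assumption that a link is "broken" if the request fails or returns a status of 400 or above. It is not the plugin's implementation, and `http_status` and `find_broken_links` are names invented for this example.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the HTTP status code for url, or None if the request fails."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # The server answered, but with an error status such as 404.
        return error.code
    except (OSError, ValueError):
        # DNS failure, timeout, refused connection, or malformed URL.
        return None


def find_broken_links(urls, get_status=http_status):
    """Return (url, status) pairs for links that look broken."""
    broken = []
    for url in urls:
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken
```

Some servers reject `HEAD` requests even when the page is fine, so a real checker would likely retry with `GET` before reporting a link as broken. The `get_status` parameter lets you swap in a fake lookup when trying the function without network access.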

Conclusion

Optimising your WordPress site for SEO is essential for improving your search engine rankings and driving more traffic to your site. The plugins listed above offer a range of features that can help you enhance your site’s SEO performance. Whether you are a beginner or an advanced user, these plugins provide powerful tools to help you achieve your SEO goals. By implementing these plugins, you can ensure that your WordPress site is well-optimised and ready to rank higher in search engine results.